The question of 'why lexical?' needs addressing at two levels. Firstly, why choose simplification given the wide scope of natural language processing applications to which I could have committed four years of my life? Secondly, why lexical simplification given the wide range of simplification research out there? I'll answer these in turn.

Why Simplification?

Let me start with a confession. I didn't start a PhD to study lexical simplification. I didn't even come with natural language processing in mind. About four years ago I was offered an opportunity to study at Manchester on the newly founded Centre for Doctoral Training (CDT). One of the aspects of the CDT programme which appealed to me was the fact that students were not initially tied to a supervisor or research group. We were in effect free for our first six months to decide upon a group, supervisor and topic.

During the first six months, I took three master's modules: two on machine learning and one on digital biology. Both are fascinating fields, and both offer ample opportunities for a PhD. I spoke to the relevant people about these opportunities, but I just couldn't find something that inspired me. Resigned to the fact that I might have to choose a topic and wait for the inspiration to come, I started to focus on the machine learning research.

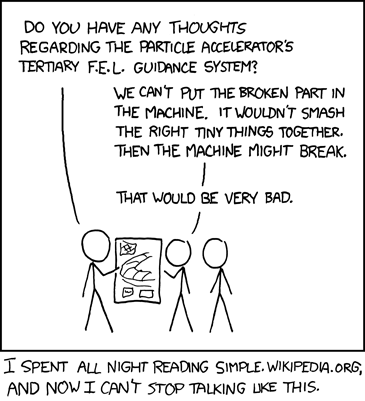

We had weekly seminars to acquaint us with the research in the school. Each seminar was given by a different research group. They varied in style and form - from the professor who brought in props to talk us through the history of hard drives to the professor who promised free cake every time their group had a major publication. One week, it was the turn of the text mining group. During the presentation the idea that text mining could be used to make difficult documents easier to understand was mentioned. I took the bait, started reading papers, emailed my prospective supervisor and from there I was away. The spark of inspiration drove me to develop my first experiments, which led to a literature review and an initial study on complex word identification.

Simplification is a great field to be working in. I like that the research I'm doing could improve a user's quality of life. OK, the technology isn't quite there yet, but the point of research is to reach out to the unattained. To do something that hasn't been done before. There are lots of opportunities in simplification. Lots of unexplored avenues. I'm going to write a long future work section in my thesis (maybe a future post?) because there is a lot to say.

Why Lexical?

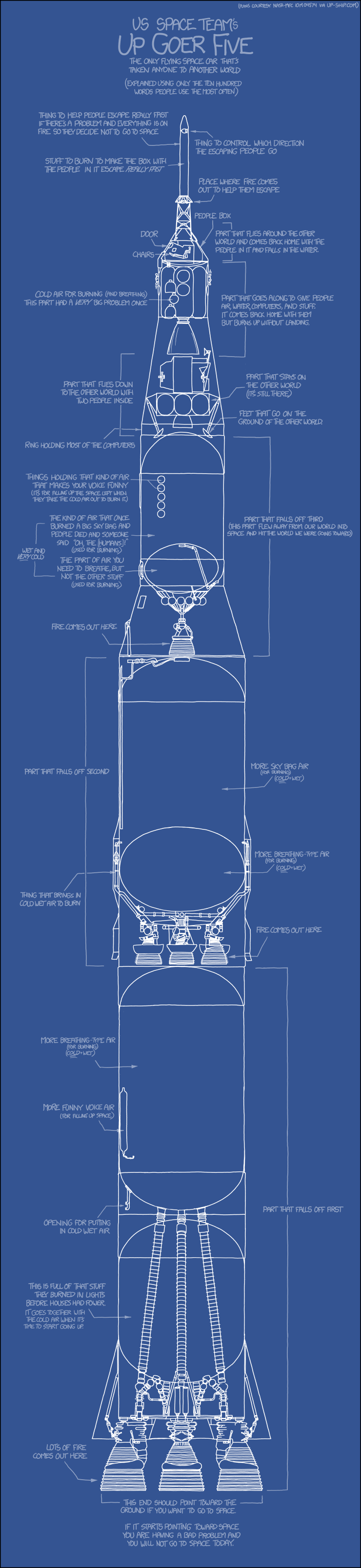

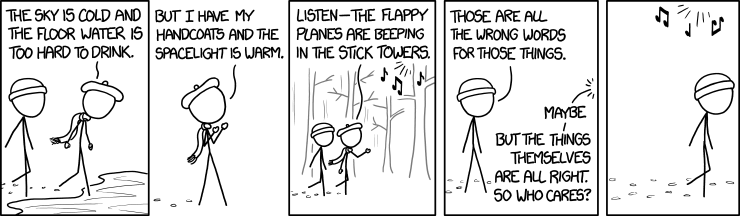

When I started looking into simplification back in 2011, there were three main avenues that I could discern from the literature. Firstly, syntactic simplification - automated methods for splitting sentences and dealing with wh- phrases and the passive voice. Secondly, lexical simplification - the automatic conversion of complex vocabulary into easy-to-understand words. Finally, semantic simplification - taking the meaning into account and doing some processing based on this. I grouped both lexical elaboration and statistical machine translation under this last category, although I would probably now put elaboration under the lexical category.
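To make the lexical avenue a little more concrete, here is a minimal sketch of what "converting complex vocabulary into easier words" can look like in code. It is a toy illustration and not the system I built: the `WORD_FREQUENCY` and `SYNONYMS` tables, the threshold of 200 and the function names are all made up for this example. A real system would draw frequencies from a large corpus and candidates from a thesaurus, and would need to worry about word sense and grammatical fit.

```python
import re

# Hypothetical toy resources, invented for this example only.
WORD_FREQUENCY = {
    "use": 900, "utilise": 12,
    "help": 850, "facilitate": 20,
    "buy": 700, "purchase": 150,
}
SYNONYMS = {
    "utilise": ["use"],
    "facilitate": ["help"],
    "purchase": ["buy"],
}


def is_complex(word, threshold=200):
    """Treat a word as complex if it is in the table and rarer than the threshold.

    Words missing from the table are left alone (assumed not complex).
    """
    return WORD_FREQUENCY.get(word, threshold) < threshold


def simplify(sentence, threshold=200):
    """Swap each complex word for its most frequent listed synonym."""

    def replace(match):
        word = match.group(0)
        lower = word.lower()
        if not is_complex(lower, threshold):
            return word
        candidates = SYNONYMS.get(lower, [])
        if not candidates:
            return word
        best = max(candidates, key=lambda w: WORD_FREQUENCY.get(w, 0))
        # Only substitute when the candidate really is more frequent.
        if WORD_FREQUENCY.get(best, 0) <= WORD_FREQUENCY.get(lower, 0):
            return word
        # Note: the original capitalisation is not preserved in this sketch.
        return best

    return re.sub(r"[A-Za-z]+", replace, sentence)


print(simplify("We utilise tools to facilitate reading."))
```

The two steps in the sketch, deciding which words are complex and then choosing a replacement, are kept deliberately separate. Using raw frequency as a stand-in for difficulty is an assumption made here for brevity.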

I felt that my research would be best placed if it fell under one of those categories. Although I could see viable research options in all of these, my background in machine learning and data mining was best suited to lexical simplification. This was also around the time that the SemEval 2012 lexical simplification task was announced. This task gave me an initial dataset, and my background in AI gave me a set of techniques to apply. From there I ran some initial experiments, implemented a basic system and started learning the complexities of the discipline.

It's strange to think that I'm writing about a decision I made several years ago. I've tried to be as honest as my memory allows in this post. The PhD has not been a particularly easy road, but it has been a good one so far.